In the world of machine learning, the variety and quality of training data can make or break a model’s performance. Traditional visual data augmentation — simple flips, rotations, or crops — falls short when it comes to capturing the full diversity of real-world scenarios. Enter the new era of generative AI: Text-to-image models like Stable Diffusion, fine-tuned on domain-specific data, can now produce rich, diverse, and highly realistic images — capturing everything from varying weather conditions to entirely new, hard-to-collect visual scenarios. By training AI with AI-generated images, we unlock a new dimension of robustness, adaptability, and efficiency in ML pipelines.

Introduction

In the field of artificial intelligence, data is the fuel that powers intelligent systems. For image processing tasks, whether it’s medical diagnosis from X-rays, autonomous vehicle navigation, or quality control in manufacturing, the quality and diversity of training data directly impact how well an AI model performs in real-world scenarios. A model trained only on sunny day driving images will struggle when it encounters rain or snow. A medical AI that has only seen one type of X-ray machine’s output might fail when deployed in a hospital with different equipment.

This is where the concept of robustness becomes crucial. A robust AI model can handle variations and unexpected situations easily, maintaining high performance even when faced with data that differs slightly from what it was trained on. The key to building such robust models lies in exposing them to as much diversity as possible during training. That diversity encompasses: different lighting conditions, weather scenarios, object orientations, backgrounds, and countless other variations that exist in the real world.

However, collecting diverse, high-quality datasets is expensive, time-consuming, and sometimes practically impossible. How do you collect thousands of images of rare medical conditions? Or capture every possible weather scenario for autonomous driving? This challenge has traditionally been addressed through data augmentation techniques, but as we’ll explore, we’re now entering an exciting new era.

Simple Data Augmentations: The Traditional Approach

For years, machine learning practitioners have relied on basic data augmentation techniques to artificially expand their datasets and improve model performance. These methods involve applying simple transformations to existing images to create variations that the model can learn from. Common augmentation techniques include:

- Rotation: spinning images by various angles to help models recognize objects regardless of orientation

- Horizontal/vertical flipping: mirroring images to double the dataset size instantly

- Cropping and scaling: zooming in and out to help models focus on different parts of objects

- Colour adjustments: modifying brightness, contrast, or saturation to simulate different lighting conditions

- Adding noise: introducing small random variations to make models more resilient to imperfect input

Research has consistently shown that these augmentations improve model performance. Studies have demonstrated accuracy improvements across various image classification tasks when basic augmentations are applied [1], [2]. The technique works because it forces the model to learn more general features rather than memorizing specific details that might not be present in new, unseen images.

Think of it like teaching someone to recognize stop signs. If you only show them perfectly centred, brightly lit stop signs, they might struggle to identify a stop sign that’s partially obscured or photographed at dusk. By showing them rotated, dimmed, and cropped versions during training, they become more capable at recognizing the essential features that make a stop sign identifiable under various conditions.

However, traditional augmentation has significant limitations. While flipping a photo of a road crossing can create a valid training example, it doesn’t help the model learn to recognize that same crossing in the rain, snow, or different seasons. These simple geometric and colour transformations can’t capture the rich complexity of real-world variations and they’re essentially creating mathematical variations of the same underlying content rather than generating truly new scenarios.

Next Level Augmentation: Enter Generative AI

This is where the revolution begins. What if, instead of just rotating and flipping existing images, we could generate entirely new images that maintain the core content while introducing realistic variations that would be expensive or impossible to collect naturally?

Recent advances in generative AI, particularly text-to-image models, have opened wide possibilities for data augmentation. Models like Stable Diffusion [3], Imagen [4], and Midjourney [5] can now create photorealistic images from text descriptions with remarkable fidelity. But the real game-changer comes when we fine-tune models on specific datasets and use targeted prompts to generate augmented training data.

Understanding Diffusion Models

Latest text-to-image generation relies primarily on diffusion concept [6], which have achieved state-of-the-art results in both image quality and text-image alignment. Understanding their technical foundation is essential for effective deployment in data augmentation pipelines.

Diffusion models work on the principle of iterative noise removal through a learned reverse diffusion process. During training, the model learns to reverse a forward diffusion process that gradually adds Gaussian noise to training images until they become indistinguishable from random noise. The reverse process, controlled by neural networks, progressively removes noise while being guided by text embeddings that describe the desired image content.

The text conditioning mechanism enables precise control over image content and style. Prompts can specify object types, spatial relationships, environmental conditions, lighting scenarios, and stylistic preferences with remarkable accuracy and consistency. This controllability is essential for systematic dataset augmentation where specific variations need to be generated reproducibly.

Fine-tuning for Domain Specificity

While pre-trained models like Stable Diffusion are incredibly capable, they’re trained on broad, general datasets. For specialized applications, fine-tuning becomes essential. This process involves taking a pre-trained model and training it further on a specific dataset relevant to your domain.

For example, if you ask a pretrained model for “a photo of a crosswalk”, it might generate an image from a bird’s-eye view, from the perspective of a car, or in a cartoon style. For an app that helps pedestrians, these images aren’t very useful. You need images specifically from the pedestrian’s point of view. This is where fine-tuning comes in. Fine-tuning is the process of taking a large, pre-trained model and giving it a small, specialized training on your own dataset. By showing the model just a few dozen images of your specific subject, you can teach it to generate new images that match that domain’s unique style, details, and context. Beyond that, fine-tuning captures the general ambience and atmosphere of the source dataset — lighting quality, the characteristic colour palettes, and the overall “feel” of the visual environment.

This fine-tuning process is remarkably data-efficient. While the original model might have been trained on millions of images, effective fine-tuning can often be achieved with just dozens of domain-specific examples.

Prompt-Driven Augmentations

Once you have a fine-tuned model that understands your specific domain, you can use text prompts to generate an incredible diversity of new training data [7]. You’re no longer limited to simple image modifications, you can now create variations that are difficult or time-consuming to collect manually. Some common augmentations include:

- Weather conditions: simulatingdifferent weather conditions like rain, snow, fog, etc.

- Lighting variations: generating the scenes under different lighting conditions like daytime, nighttime, sunset, etc.

- Environments: creating the scenes with the different environment styles like urban environment or rural environment

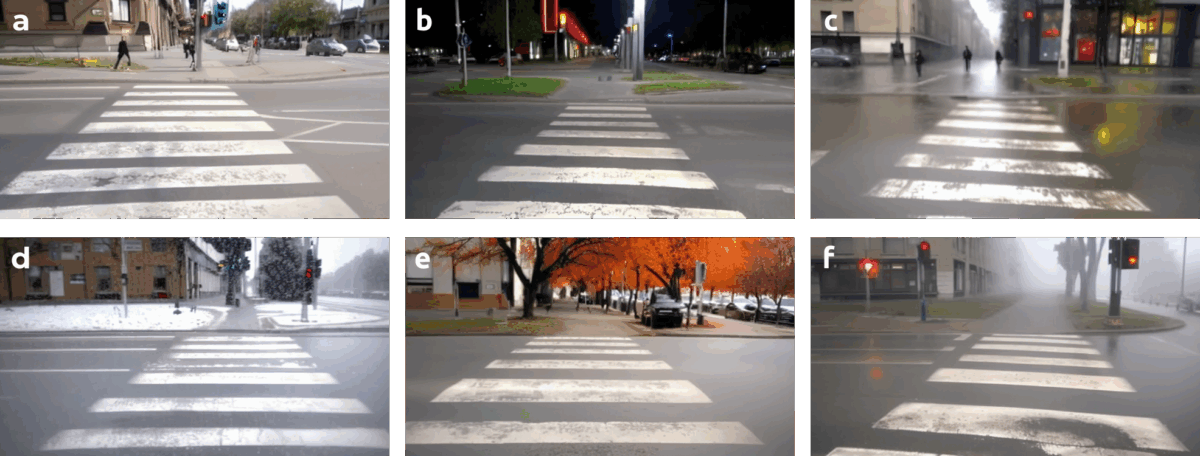

Let’s consider training an AI to assist visually impaired individuals by identifying crosswalks from a smartphone camera. Collecting a comprehensive dataset for this is incredibly challenging. You would need photos of crosswalks in different cities, at all times of day, in every season, and in all weather conditions which might be an enormous task.

However, with a fine-tuned generative model, you can simply ask for the exact scenarios you need to make your model robust:

- “A pedestrian’s perspective of a crosswalk, sunny afternoon“

- “A pedestrian’s perspective of a crosswalk, night conditions“

- “A pedestrian’s perspective of a crosswalk, rainy weather“

- “A pedestrian’s perspective of a crosswalk, snowy weather“

- “A pedestrian’s perspective of a crosswalk, autumn, urban environment“

- “A pedestrian’s perspective of a crosswalk, morning fog“

Suddenly, you have a rich, diverse dataset that covers various scenarios. Some of the examples for various first-person view scenes of crosswalk are shown below.

What’s Next? The Future is a Hybrid Approach

The ability to generate high-quality, targeted, and diverse data on demand is a game-changer. By using fine-tuned diffusion models, we can fill the gaps in our datasets, train models on scenarios that are too expensive or dangerous to collect, and ultimately build more robust, adaptable, and efficient AI systems.

However, this doesn’t mean real-world data is obsolete. The most powerful approach moving forward will almost certainly be a hybrid model. Research is already showing that models trained on a mix of real and high-quality AI-generated images often outperform those trained on either one alone [8]. Real data provides the essential “ground truth”, keeping the model anchored to reality, while synthetic data provides the diversity and coverage of edge cases needed for true robustness.

We are just beginning to scratch the surface of what’s possible. As generative models become even more powerful and controllable, the line between real and synthetic data will blur, unlocking a new level of performance and capability for AI across every industry.

- C. Shorten and T. M. Khoshgoftaar, ‘A survey on Image Data Augmentation for Deep Learning’, Journal of Big Data, vol. 6, no. 1, p. 60, Jul. 2019, doi: 10.1186/s40537-019-0197-0.

- S. Yang, W. Xiao, M. Zhang, S. Guo, J. Zhao, and F. Shen, ‘Image Data Augmentation for Deep Learning: A Survey’, Nov. 05, 2023, arXiv: arXiv:2204.08610. doi: 10.48550/arXiv.2204.08610.

- ‘Stability AI Image Models’, Stability AI. Accessed: Sep. 24, 2025. [Online]. Available: https://stability.ai/stable-image

- ‘Imagen: Text-to-Image Diffusion Models’. Accessed: Sep. 24, 2025. [Online]. Available: https://imagen.research.google/

- ‘Midjourney’, Midjourney. Accessed: Sep. 24, 2025. [Online]. Available: https://www.midjourney.com/home

- R. Rombach, A. Blattmann, D. Lorenz, P. Esser, and B. Ommer, ‘High-resolution image synthesis with latent diffusion models’, in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022, pp. 10684–10695.

- B. Trabucco, K. Doherty, M. Gurinas, and R. Salakhutdinov, ‘Effective Data Augmentation With Diffusion Models’, May 25, 2023, arXiv: arXiv:2302.07944. doi: 10.48550/arXiv.2302.07944.

- L. Eversberg and J. Lambrecht, ‘Combining Synthetic Images and Deep Active Learning: Data-Efficient Training of an Industrial Object Detection Model’, Journal of Imaging, vol. 10, no. 1, p. 16, Jan. 2024, doi: 10.3390/jimaging10010016.

Author

doc. dr. sc. Krešimir Romić

Text and/or images are partially generated by artificial intelligence